At Tessian, our engineering teams work to ensure that our backend systems can handle the workloads our clients require. We do this in lots of industry-standard ways: continuous integration pushed to a continuous-use staging environment, unit and module tests, integration tests and high-load simulations.

On the Node.js team, we needed a load testing service which could replicate email traffic above and beyond the 9 am problem (when everyone logs on at work and replies to the emails they received overnight). Off-the-shelf load testers are typically designed for REST API traffic: hitting a server with HTTP(S) requests until it breaks. We needed something smarter: something that could generate high network traffic and still have the capacity to hold a responsive SMTP conversation for each connection.

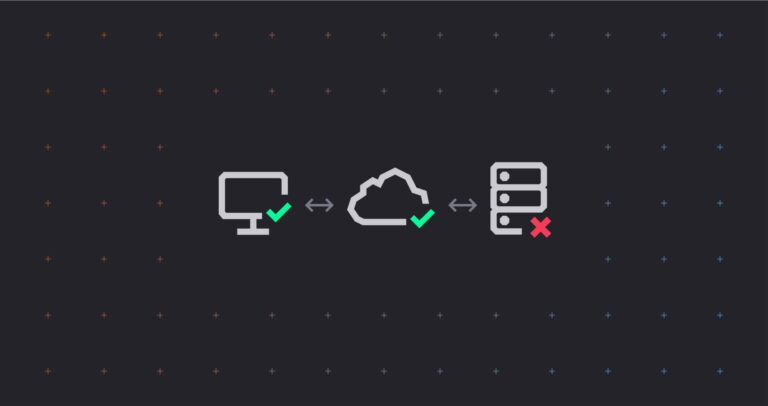

Like all good engineering projects, we began with the simplest of setups: using swaks to generate and send emails (the source) and a simple instance of Haraka (an SMTP mail server) running on Node.js to receive the traffic (the sink). Running the source and sink on separate AWS compute instances gave us a trivial-to-set-up, rampable load tester. Executing swaks on a single core can generate and send around 27 emails/second. Coding a simple bash script to launch swaks processes across dozens of cores (AWS compute instances can give you up to 72 virtual cores) should have provided us with a cool 27 × 72 = 1944 emails/second.

Of course, it didn’t.

There are some basic overheads in this simple setup. Swaks is a Perl script, so each time a message is sent, a new Perl process needs to be started, the script interpreted and the process terminated. On the sink side, Haraka does quite a lot of processing of each email it receives (parsing the headers and message body, checking address formats and so on), none of which we really needed for our purposes. The overall throughput came out at around 450 emails/second. Not a bad start, but we felt like we could do better.
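
To make the fan-out and its per-message cost concrete, here is a rough sketch of the same idea written in Node.js rather than bash. It is not the script we ran: the helper names (`idealRate`, `swaksArgs`, `runWorker`, `launch`) and the target host, port and addresses are placeholders invented for illustration. It assumes `swaks` is installed and on the `PATH`.

```javascript
// Sketch only: the original was a bash script; this shows the same fan-out in Node.js.
const { spawn } = require('node:child_process');
const os = require('node:os');

// Ideal throughput if every core sustained the single-core rate.
function idealRate(perCoreRate, cores) {
  return perCoreRate * cores;
}

// Build the swaks arguments for one message (all values are placeholders).
function swaksArgs({ host, port, from, to }) {
  return ['--server', host, '--port', String(port), '--from', from, '--to', to];
}

// One worker loop: a fresh swaks (Perl) process is started for every message,
// which is the per-message startup cost described above.
function runWorker(target, remaining, onDone) {
  if (remaining === 0) return onDone(null);
  let failed = false;
  const child = spawn('swaks', swaksArgs(target), { stdio: 'ignore' });
  child.on('error', (err) => { failed = true; onDone(err); });
  child.on('close', () => {
    if (!failed) runWorker(target, remaining - 1, onDone);
  });
}

// Start one worker loop per core (defaults to the number of logical CPUs).
function launch(target, messagesPerWorker, workers = os.cpus().length) {
  for (let i = 0; i < workers; i++) {
    runWorker(target, messagesPerWorker, (err) => {
      if (err) console.error(`worker ${i} failed: ${err.message}`);
    });
  }
}
```

Something like `launch({ host: 'sink.example', port: 25, from: 'a@example.com', to: 'b@example.com' }, 1000)` would start the run, and `idealRate(27, 72)` gives the 1944 emails/second ceiling that the process-per-message design never gets near.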

First we replaced the Haraka sink with a much simpler Node.js server. We coded a net.Server instance and implemented responses for the four basic SMTP commands: MAIL FROM, RCPT TO, DATA and QUIT. We didn’t include any validation of the received data (we run different tests for that) because we wanted pure performance. The server recorded various statistics along the way (clock time, data transfer rate, active connection numbers, etc.) and logged them with console.log() each time it received an email. In its entirety, the completely functional (but not exactly RFC-compliant) Node.js SMTP sink server was coded in just 9 functions and 200 lines.
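
A stripped-down sink along these lines might look like the sketch below. It is an illustration rather than our production code: `createSession`, `startSink`, the shape of the `stats` object and the reply strings are all assumptions, and the per-email console.log() output is left out for brevity. The protocol handling is kept in a plain function, separate from the socket, so it can be exercised without a network.

```javascript
const net = require('node:net');

// Per-connection SMTP state machine. Feed it raw text; it returns the replies to send.
function createSession(stats) {
  let buffer = '';
  let inData = false;

  function handleLine(line) {
    if (inData) {
      if (line === '.') {              // a lone dot ends the message body
        inData = false;
        stats.messages += 1;
        return '250 OK\r\n';
      }
      return '';                       // body lines are discarded, never parsed
    }
    const verb = line.toUpperCase();
    if (verb === 'DATA') {
      inData = true;
      return '354 End data with <CR><LF>.<CR><LF>\r\n';
    }
    if (verb === 'QUIT') return '221 Bye\r\n';
    // MAIL FROM, RCPT TO and anything else (e.g. HELO) are accepted without validation.
    return '250 OK\r\n';
  }

  return function feed(chunk) {
    stats.bytes += chunk.length;       // latin1 text: one character per byte
    buffer += chunk;
    let replies = '';
    let end;
    while ((end = buffer.indexOf('\r\n')) !== -1) {
      replies += handleLine(buffer.slice(0, end));
      buffer = buffer.slice(end + 2);
    }
    return replies;
  };
}

// Wire the session to a net.Server. The port is chosen by the caller.
function startSink(port, stats = { messages: 0, bytes: 0, connections: 0 }) {
  const server = net.createServer((socket) => {
    stats.connections += 1;
    socket.setEncoding('latin1');
    const feed = createSession(stats);
    socket.write('220 sink ready\r\n');
    socket.on('data', (chunk) => {
      const replies = feed(chunk);
      if (replies) socket.write(replies);
      if (replies.endsWith('221 Bye\r\n')) socket.end();
    });
    socket.on('error', () => {});      // ignore client resets during load tests
    socket.on('close', () => { stats.connections -= 1; });
  });
  server.listen(port);
  return { server, stats };
}
```

Calling `startSink(2525)` would listen on port 2525 (an arbitrary choice). Throwing every body line away is what skips the parsing work a full mail server does.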

Back to the test. Re-running the 72-core swaks script with the new Node.js sink didn’t do much to help the maximum rate with small messages (which still peaked at around 450 emails/second); it did, however, make a big difference with larger messages. With message parsing removed on the sink side, Node was able to make full use of its multi-connection network streaming capability and sustain the maximum incoming rate for multi-megabyte messages.

Looking at the server load figures, it was clear that the sink server was busy, but not too busy. The number of active connections averaged just 6, with small bursts into the dozens. Time to focus on the source.

Coding a new Node.js module to load and send emails over SMTP was simple enough. Around 100 lines of code later, we had a fully functional sending script, complete with command-line options for the message size and destination server. Firing up an instance of it on a single core achieved a pretty smart 1426 emails/second (10K messages transferred in 7.01 seconds). We then fired up sending instances across increasing numbers of cores until we plateaued at ~4700 emails/second, more than 10x the rate of the first setup. For context, that’s more than our company’s total current internal email traffic over a 24 hour period, squashed down to 1 second.
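
The core of such a sender can be sketched as follows. Again, this is a hedged illustration and not our module: `buildMessage`, `conversation`, `sendMany`, `rate` and the option names are invented here, and the sketch adds a `HELO` greeting so it would also work against stricter servers. The key difference from swaks is that a single long-lived process reuses one connection for many messages.

```javascript
const net = require('node:net');

// Build a message of roughly `size` bytes (headers plus filler body).
function buildMessage(size) {
  const headers = 'Subject: load test\r\n\r\n';
  return headers + 'x'.repeat(Math.max(0, size - headers.length));
}

// The client side of the SMTP conversation, one command per server reply.
function* conversation({ from, to, size, count }) {
  const body = buildMessage(size);
  yield 'HELO loadtest.example\r\n';
  for (let i = 0; i < count; i++) {
    yield `MAIL FROM:<${from}>\r\n`;
    yield `RCPT TO:<${to}>\r\n`;
    yield 'DATA\r\n';
    yield `${body}\r\n.\r\n`;
  }
  yield 'QUIT\r\n';
}

// Emails per second for a finished run.
function rate(messages, seconds) {
  return messages / seconds;
}

// Send `count` messages over one connection, then report the elapsed time.
function sendMany(options, onDone) {
  const commands = conversation(options);
  const socket = net.connect(options.port, options.host);
  const started = process.hrtime.bigint();
  let pending = '';
  let completed = false;
  let reported = false;

  const finish = (err) => {
    if (reported) return;
    reported = true;
    const seconds = Number(process.hrtime.bigint() - started) / 1e9;
    onDone(err, err ? null : { messages: options.count, seconds });
  };

  socket.setEncoding('latin1');
  socket.on('data', (chunk) => {
    pending += chunk;
    let end;
    while ((end = pending.indexOf('\r\n')) !== -1) {
      const line = pending.slice(0, end);
      pending = pending.slice(end + 2);
      if (line[3] === '-') continue;   // continuation of a multi-line reply
      if (/^[45]/.test(line)) {        // server refused a command
        socket.destroy();
        return finish(new Error(line));
      }
      const next = commands.next();
      if (next.done) {
        completed = true;
        socket.end();
      } else {
        socket.write(next.value);
      }
    }
  });
  socket.on('error', finish);
  socket.on('close', () => finish(completed ? null : new Error('connection closed early')));
}
```

As a check on the single-core figure, `rate(10000, 7.01)` comes out at roughly 1426.5 emails/second. Running one such process per core is what scales the rate further.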

This is one of many reasons we love using Node.js; its ease and efficiency in handling high-performance network connections is unrivaled, and without it, it’s difficult to imagine the lengths we’d need to go to in order to achieve simple high-throughput load testing of our email servers. Of course, the load tester is still being worked on (there’s more to squeeze out of it), but for now, we’re pretty happy with its performance.
