Tessian Blog



-

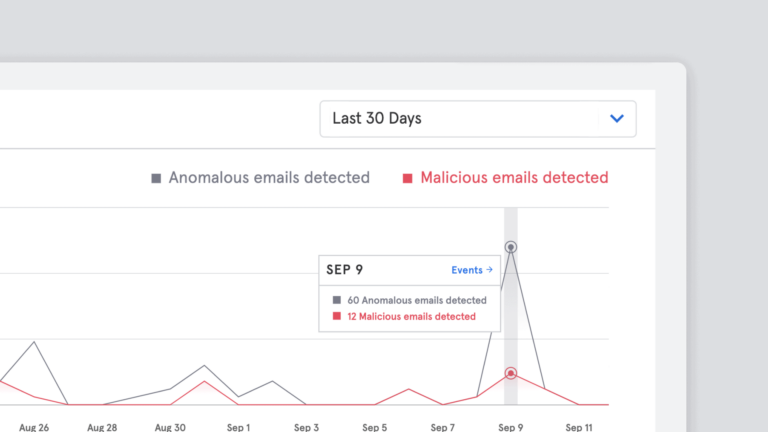

Integrated Cloud Email Security, Advanced Email Threats

Product Update: Enhanced Security Event Filtering and Reporting

-

Advanced Email Threats

The Three Biggest Problems Facing Law Firm Security Leaders Right Now

-

Integrated Cloud Email Security, Advanced Email Threats, Data & Trends

Product Update: Improvement to Algorithms Sees 15% Increase in Detection of Advanced Email Threats

-

Advanced Email Threats

When a Breach is More Than Just a Breach

-

Advanced Email Threats

52% of U.S. Healthcare Insurance Providers At Risk of Email Impersonation During Open Enrollment

-

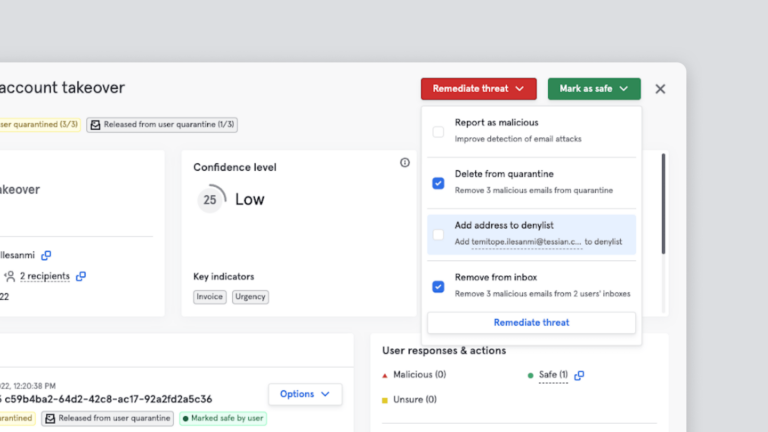

Integrated Cloud Email Security

Product Update: Enhanced Event Triage to Speed Up Detection and Response to Malicious Emails

-

Threat Stories

Tessian Threat Intel

-

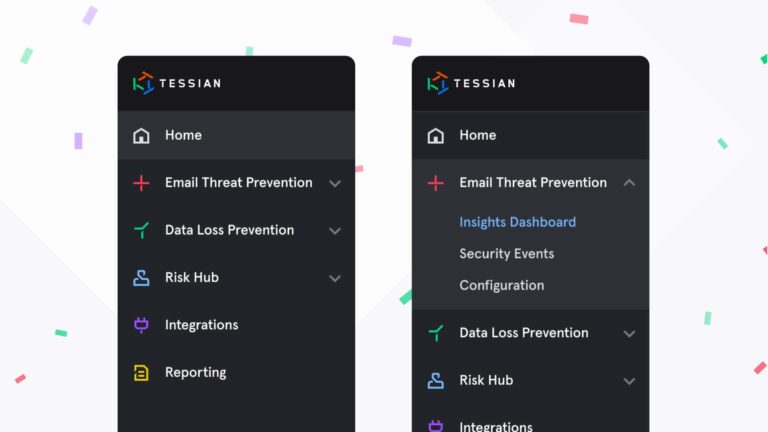

Integrated Cloud Email Security

Product Update: Tessian Enhances Portal Navigation to Help Security Teams Respond to Incidents Faster

-

Integrated Cloud Email Security

Phishing, Email Breaches and Multi-Factor Authentication Compromise Take Center Stage at Black Hat 2022

-

Interviews With CISOs, Podcast, Compliance

Lola Obamehinti on What Good Security Awareness Training Looks Like

-

Customer Stories

Preventing Data Exfiltration at a FTSE 100 Tech Company

-

Interviews With CISOs, Integrated Cloud Email Security

Hot Takes: 8 Ways to Strengthen the CISO and CFO Relationship

-

Email DLP, Advanced Email Threats

Key Takeaways from IBM’s 2022 Cost of a Data Breach Report

-

Integrated Cloud Email Security, Email DLP

Tessian Recognized as a Representative Vendor in the 2022 Gartner® Market Guide for Data Loss Prevention

-

Integrated Cloud Email Security

How to use phishing tests and in-the-moment cybersecurity awareness training to strengthen your cyber defenses

-

Threat Stories

Tessian Threat Intel Roundup: July 2022

-

New Study from Forrester Consulting: The Total Economic Impact™ of Tessian Cloud Email Security Platform

-

Integrated Cloud Email Security, Advanced Email Threats

How to Prepare for Increasing Cyber Risk

-

Email DLP, Integrated Cloud Email Security, Advanced Email Threats

What is an Integrated Cloud Email Security (ICES) Solution?

-

Threat Stories

Tessian Threat Intel Roundup for June