Tessian Blog


-

Product Updates

What’s New – Attack Detection with LLMs, Internal Account Takeover Defense, and Integration with Palo Alto Cortex XSOAR

-

Proofpoint Closes Acquisition of Tessian

-

How Tessian Uses Apache Iceberg to Stop Advanced Email Threats

-

Product Updates

What’s new – Advanced query, keyword search, and remediation via API

-

Tessian + Microsoft: Better Together

-

What’s new — QR Code Phishing Detection, Optical Character Recognition, and Faster Search

-

Evolution of AI-Powered Email Defense: Proofpoint Announces Intent to Acquire Tessian

-

5 Trending Topics on the Future of Email Security

-

Advanced Email Threats, Product Updates

Tessian stopped over 3,000 QR code phishing attacks in just 1 day

-

Product Updates

What’s New – Enhanced API, Lightning Search and Updated Navigation

-

The ICO expands GDPR guidance to recommend a data loss prevention tool

-

Product Updates

Tessian Launches Automated Email Threat Remediation for Security Teams

-

Product Updates

What’s new: Abuse Mailbox Response, APIs, and dashboards

-

Integrated Cloud Email Security

From on-prem to cloud: Supporting your email security journey

-

Integrated Cloud Email Security, Life at Tessian

Tessian Named a Strong Performer in Enterprise Email Security by Independent Research Firm

-

Beyond the SEG / Microsoft + Tessian, Integrated Cloud Email Security, Advanced Email Threats, Security Awareness Coaching

Latest Microsoft Report Confirms Need for AI-Based Phishing Protection

-

Advanced Email Threats, Product Updates

Unlock Email Security Visibility Within Splunk

-

Life at Tessian

Tessian Launches Advanced Email Threat Response Capabilities for Security Teams

-

Life at Tessian

Tessian is First Email Security Platform to Fully Integrate with M365 to Provide Threat Protection and Insider Risk Protection

-

Integrated Cloud Email Security, Product Updates

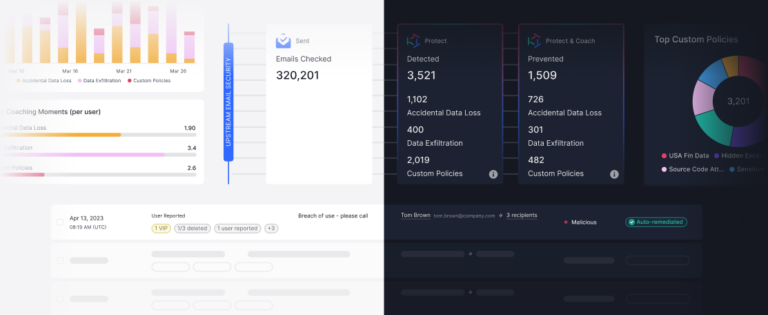

Respond Faster. Prevent More.