Tessian Blog



Beyond the SEG / Microsoft + Tessian, Product Updates

Tessian Launches Complete M365 Integration

-

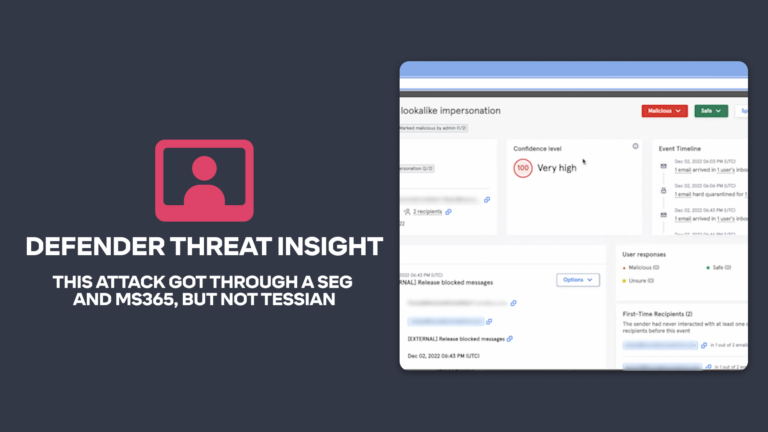

Beyond the SEG / Microsoft + Tessian, Advanced Email Threats

Tessian in Action: This Attack Got Through a SEG and M365, but Not Tessian.

-

Advanced Email Threats

Tessian in Action: Phishing Attack Sends Credentials to Telegram

-

Beyond the SEG / Microsoft + Tessian, Advanced Email Threats, Threat Stories

Tessian in Action: Microsoft Credential Scraping Attempt

-

Beyond the SEG / Microsoft + Tessian

Tessian Recognized as a Representative Vendor in the 2023 Gartner® Market Guide for Email Security

-

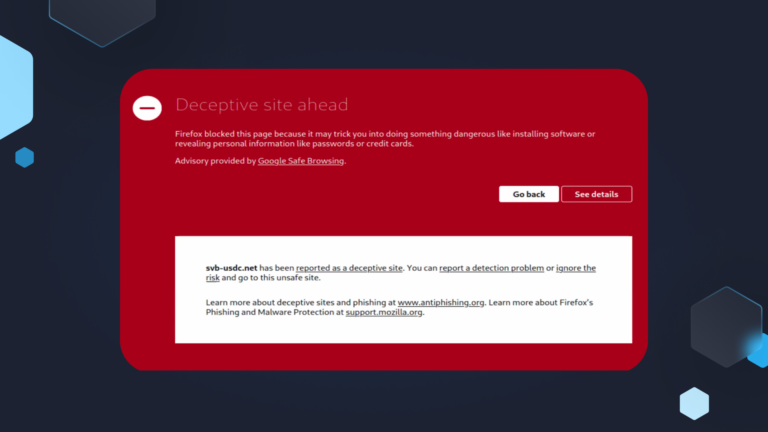

Advanced Email Threats, Attack Types, Threat Stories

Dozens of SVB and HSBC-themed URLs Registered

-

Advanced Email Threats, Attack Types, Threat Stories

The Current SVB Banking Crisis Will Increase Cyberattacks, Here’s How to Prepare

-

Beyond the SEG / Microsoft + Tessian, Advanced Email Threats

Why You Should Download the Microsoft 365 + Tessian Guide

-

Insider Risks

Taking a Modern Approach to Insider Risk Protection on Email

-

Life at Tessian, Engineering Blog

Our VP of Engineering on Tessian’s Mission and His First 90 Days in the Role

-

Advanced Email Threats, Compliance

Will Australia’s Tougher Cyber Regulation Force Firms to Upgrade Their Security?

-

Life at Tessian

A decade in the making, but the best is yet to come.

-

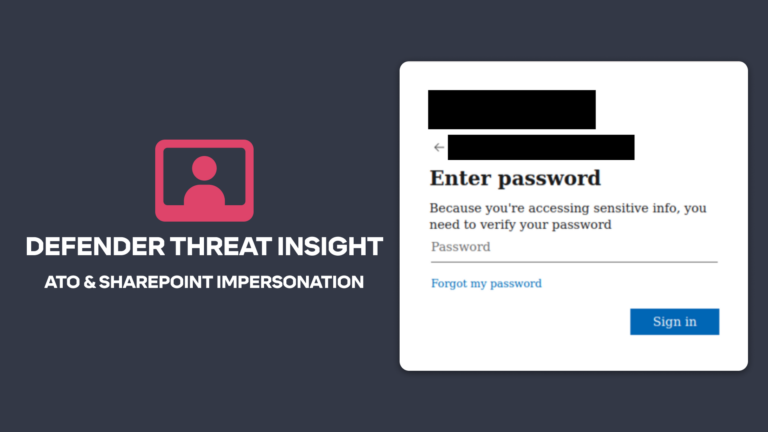

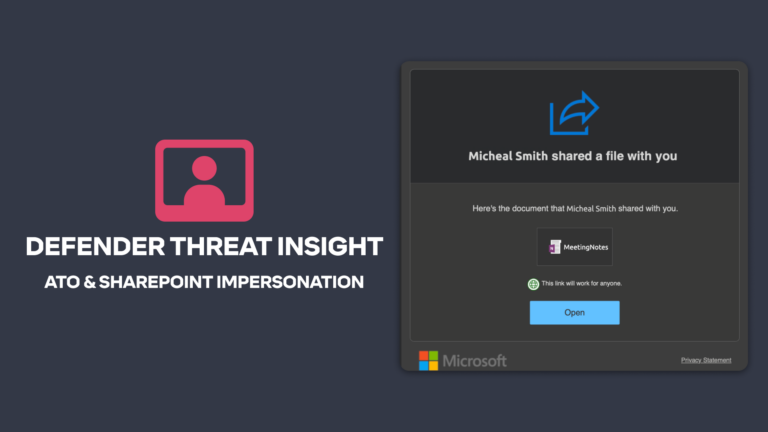

Advanced Email Threats

Tessian in Action: Account Takeover & SharePoint File Share Attack

-

Email DLP, Insider Risks

Real Examples of Negligent Insider Risks

-

Email DLP, Insider Risks

What is a malicious insider? What are the threats from malicious insiders to your organization – and how can you minimize those risks?

-

Email DLP, Attack Types, Advanced Email Threats, Insider Risks

Preventing ePHI Breaches over Email for Healthcare Organizations

-

Email DLP, Integrated Cloud Email Security, Advanced Email Threats

Secure Email Gateways (SEGs) vs. Integrated Cloud Email Security (ICES) Solutions

-

Advanced Email Threats

15 Examples of Real Social Engineering Attacks

-

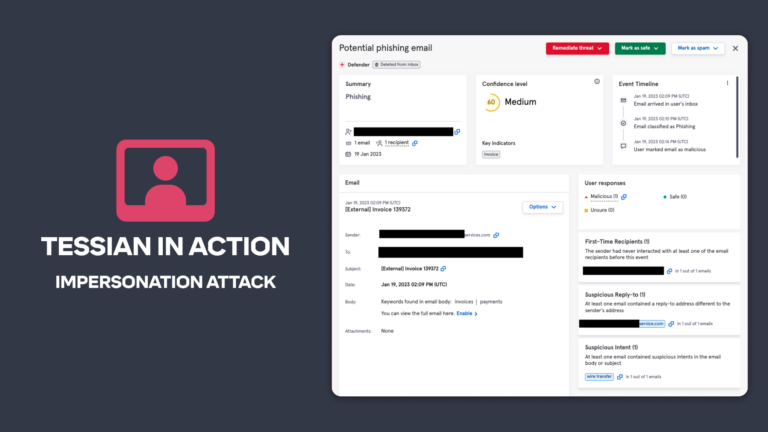

Integrated Cloud Email Security, Advanced Email Threats

Tessian in Action: Stopping an Impersonation Attack

-

Advanced Email Threats

The Time for Cloud Email Security is Now: Microsoft 365 + Tessian