Email DLP

-

Email DLP, Insider Risks

What is Data Exfiltration? Tips for Preventing Data Exfiltration

-

Email DLP, Insider Risks

Why Taking Your Work With You When You Leave a Company Isn’t a Smart Idea

-

Email DLP, Remote Working, Insider Risks

How the Great Resignation is Creating More Security Challenges

-

Email DLP

Why Email Security is a Top Cybersecurity Control

-

Email DLP, Insider Risks

When Your Best DLP Rules Still Aren’t Good Enough…

-

Email DLP, Remote Working, Insider Risks

Keeping Your Data Safe During The Great Re-Evaluation

-

Email DLP, Interviews With CISOs

Q&A with Punit Rajpara, Head of IT and Business Systems at GoCardless

-

Email DLP, Integrated Cloud Email Security, Advanced Email Threats

A Year in Review: 2021 Product Updates

-

Email DLP, Integrated Cloud Email Security, Customer Stories

16 Ways to Get Buy-In For Cybersecurity Solutions

-

Email DLP

The Ultimate Guide to Data Loss Prevention

-

Email DLP

Why Email Encryption Isn’t Enough: The Need for Intelligent Email Security

-

Email DLP, Integrated Cloud Email Security, Advanced Email Threats

Tessian Recognized as a Representative Vendor in 2021 Gartner® Market Guide for Email Security

-

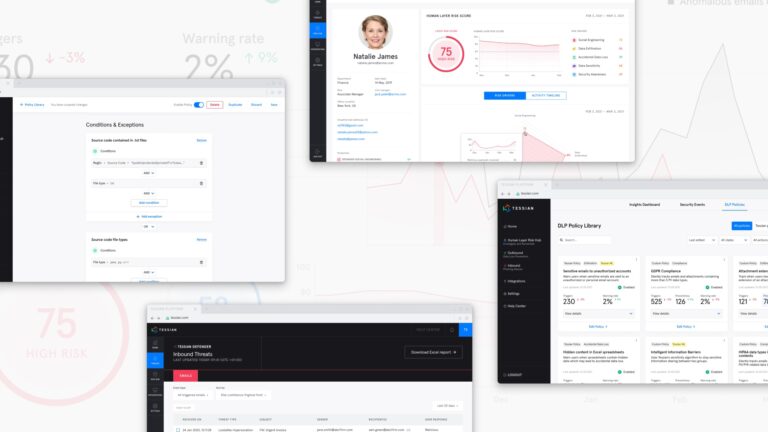

Email DLP

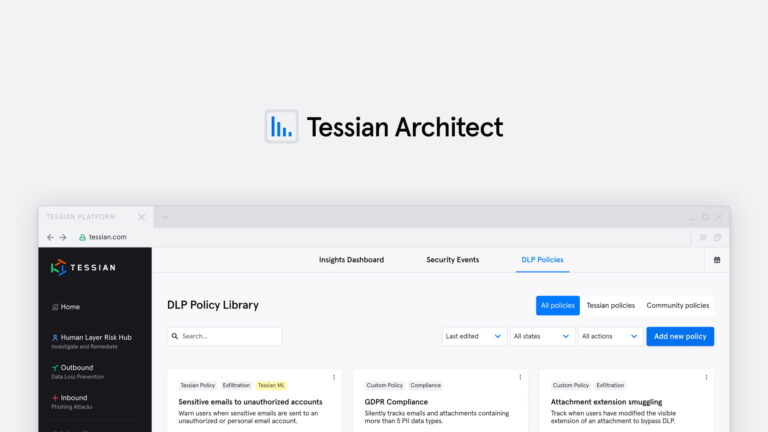

Introducing Tessian Architect: The Industry’s Only Intelligent Data Loss Prevention Policy Engine

-

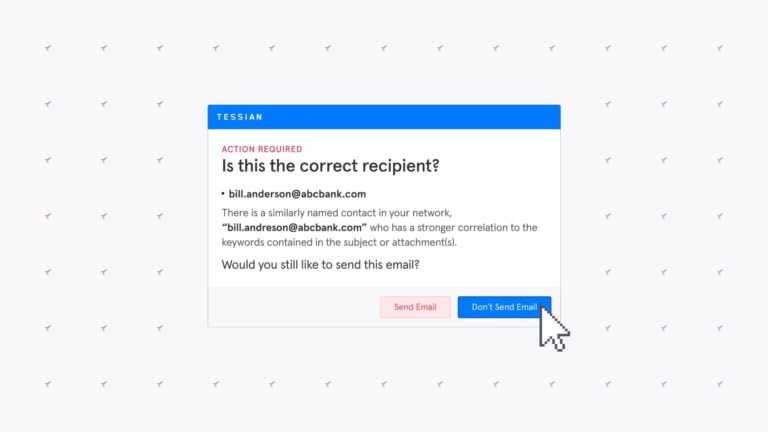

Email DLP, Integrated Cloud Email Security, Insider Risks, Compliance

You Sent an Email to the Wrong Person. Now What?

-

Email DLP, Customer Stories

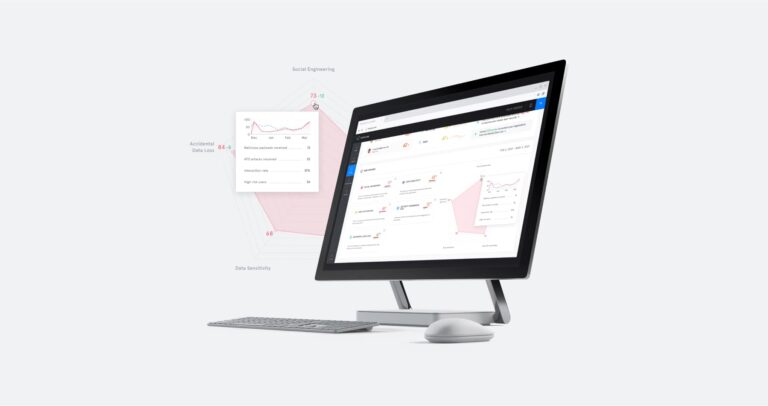

Customer Story: How Tessian Helped a Private Equity Firm Achieve Threat Visibility Through A Platform Approach

-

Email DLP, Advanced Email Threats

New ESG Report Highlights Gaps in M365 Native Security Tools

-

Email DLP, Customer Stories

Customer Story: How Tessian Combines Data Loss Prevention With Education in Financial Services

-

Email DLP

How to Close Critical Data Loss Prevention (DLP) Gaps in Microsoft 365

-

Email DLP, Integrated Cloud Email Security

Legacy Data Loss Prevention vs. Human Layer Security

-

Email DLP, Advanced Email Threats, Compliance

5 Cyber Risks In Manufacturing Supply Chains