Integrated Cloud Email Security

-

Email DLP, Integrated Cloud Email Security, Advanced Email Threats

Buyer’s Guide to Integrated Cloud Email Security

-

Integrated Cloud Email Security, Advanced Email Threats

Nation-States – License to Hack?

-

Integrated Cloud Email Security, Advanced Email Threats

Playing Russian Roulette with Email Security: Why URL Link Rewriting Isn’t Effective

-

Life at Tessian, Integrated Cloud Email Security, Advanced Email Threats, Engineering Blog

Why Confidence Matters: How We Improved Defender’s Confidence Scores to Fight Phishing Attacks

-

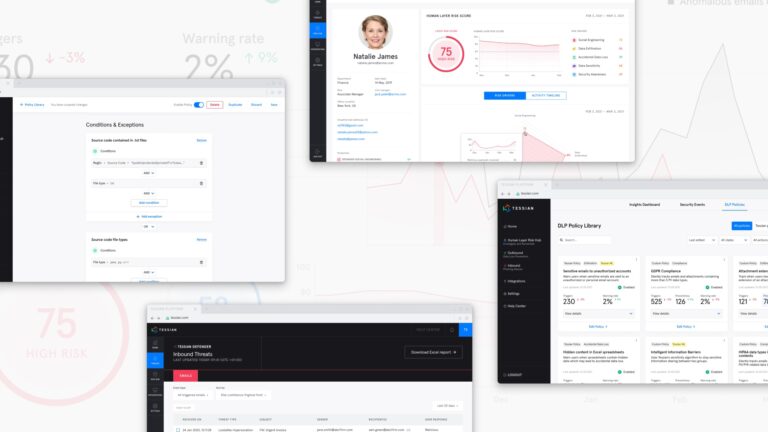

Email DLP, Integrated Cloud Email Security, Advanced Email Threats

A Year in Review: 2021 Product Updates

-

Integrated Cloud Email Security, Advanced Email Threats

Product Integration News: Tessian + KnowBe4 = Tailored Phishing Training

-

Email DLP, Integrated Cloud Email Security, Customer Stories

16 Ways to Get Buy-In For Cybersecurity Solutions

-

Interviews With CISOs, Integrated Cloud Email Security, Advanced Email Threats

All Cybersecurity 2022 Trend Articles Are BS, Here’s Why

-

Integrated Cloud Email Security

Five Reasons Why Enterprise Sales Engineers Are At Higher Risk From Misdirected Emails

-

Email DLP, Integrated Cloud Email Security, Advanced Email Threats

Tessian Recognized as a Representative Vendor in 2021 Gartner® Market Guide for Email Security

-

Integrated Cloud Email Security

New Forrester Consulting Research Shows Human Layer Security is the Solution Security Leaders Have Been Looking For

-

Integrated Cloud Email Security

Seven Things We Learned at Our Fall Human Layer Security Summit

-

Life at Tessian, Integrated Cloud Email Security

Tessian Announces Allen Lieberman as its Chief Product Officer

-

Integrated Cloud Email Security

Integration Announcement: Tessian + Okta

-

Integrated Cloud Email Security

Fear Isn’t The Motivator We Think It Is…

-

Integrated Cloud Email Security

Here’s What’s Happening at our SIXTH Human Layer Security Summit on Nov 4th

-

Integrated Cloud Email Security

New Technology Integration: Sumo Logic Tessian App

-

Email DLP, Integrated Cloud Email Security, Insider Risks, Compliance

You Sent an Email to the Wrong Person. Now What?

-

Integrated Cloud Email Security, Email DLP

Legacy Data Loss Prevention vs. Human Layer Security

-

Integrated Cloud Email Security, Advanced Email Threats

Legacy Phishing Prevention Solutions vs. Human Layer Security