Integrated Cloud Email Security

-

Integrated Cloud Email Security

Tessian Partners with Optiv Security and Moves to a 100% Channel Model

-

Integrated Cloud Email Security, Email DLP

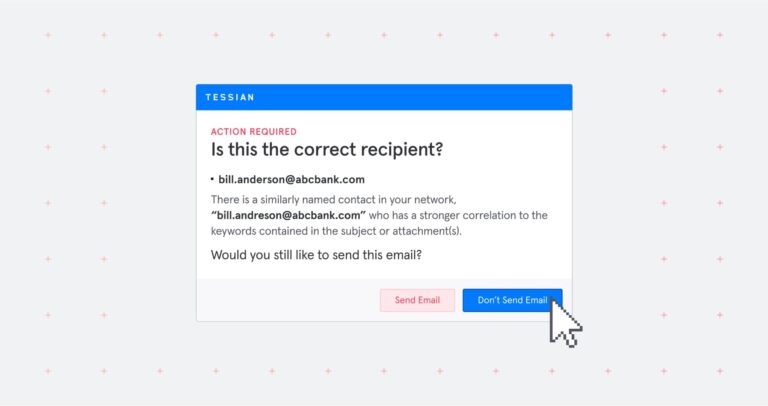

What is Email DLP? Overview of DLP on Email

-

Integrated Cloud Email Security, Email DLP, Advanced Email Threats, Compliance

7 Ways CFOs Can (And Should) Support Cybersecurity

-

Integrated Cloud Email Security

5 Challenges Enterprise Customers Face With Security Vendors

-

Integrated Cloud Email Security

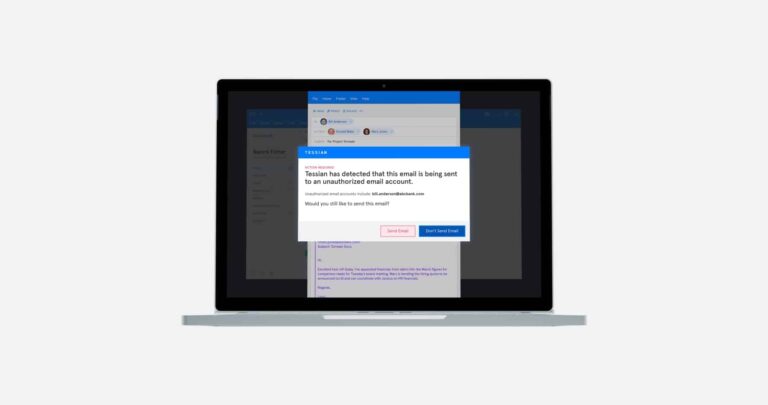

What are In-The-Moment Warnings and Why Are They Effective?

-

Integrated Cloud Email Security

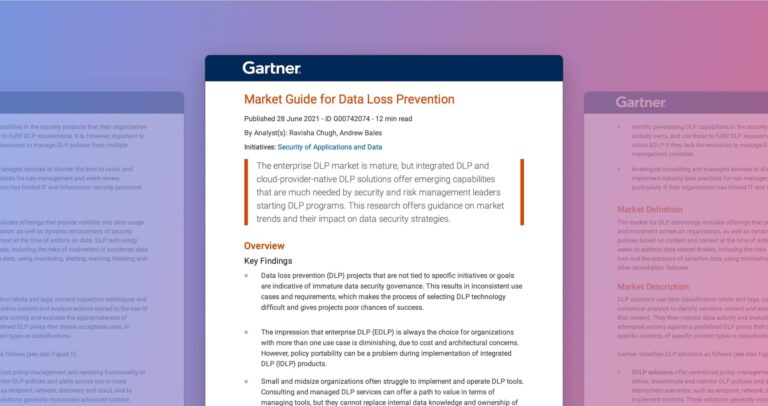

Tessian Recognized as a Representative Vendor in 2021 Gartner Market Guide for Data Loss Prevention

-

Integrated Cloud Email Security

The Ultimate Guide to Human Layer Security

-

Integrated Cloud Email Security

10 Cybersecurity Events & Webinars in July to Sign Up For

-

Integrated Cloud Email Security, Email DLP, Insider Risks

What is an Insider Threat? Insider Threat Definition, Examples, and Solutions

-

Integrated Cloud Email Security

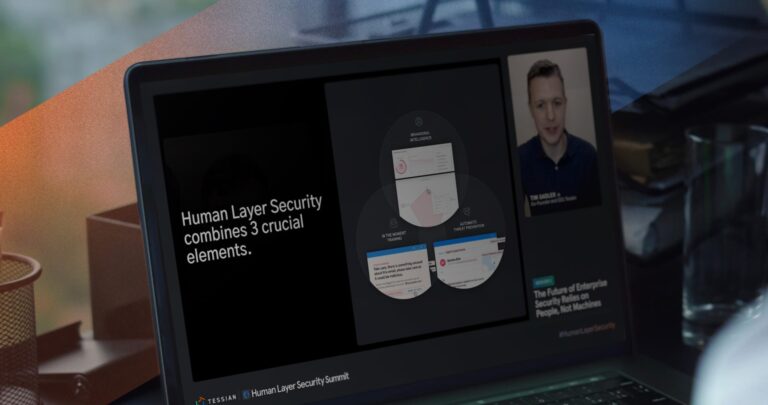

6 Insights From Tessian Human Layer Security Summit

-

Integrated Cloud Email Security, Advanced Email Threats

Is Your Office 365 Email Secure?

-

Integrated Cloud Email Security, Email DLP, Compliance

At a Glance: Data Loss Prevention in Healthcare

-

Integrated Cloud Email Security, Life at Tessian

Announcing our $65M Series C led by March Capital

-

Integrated Cloud Email Security

June Human Layer Security Summit: Meet the Speakers

-

Integrated Cloud Email Security, Advanced Email Threats, Customer Stories

How Tessian Reduced Click-Through Rates on Phishing Emails From 20% to Less Than 5%

-

Integrated Cloud Email Security, Email DLP, Insider Risks, Compliance

The State of Data Loss Prevention in the Financial Services Sector

-

Integrated Cloud Email Security, Podcast

Five Things I Learned From Launching A Podcast

-

Integrated Cloud Email Security

Machine vs. Machine: Setting the Record Straight on Offensive AI

-

Integrated Cloud Email Security

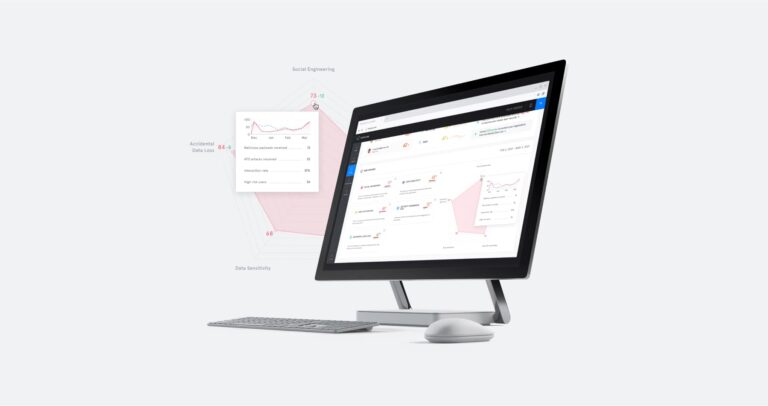

Risk Management Made Easy: Introducing Tessian Human Layer Risk Hub

-

Integrated Cloud Email Security, Advanced Email Threats

Types of Email Attacks Every Business Should Prepare For